- Stricter controls for the Darwin Core occurrenceID to improve the stability of record-level identifiers network-wide

- Ability to support Microsoft Excel spreadsheets natively

- Japanese translation thanks to Dr. Yukiko Yamazaki from the National Institute of Genetics (NIG) in Japan

The most significant new feature in this release is the ability to validate that each record within an Occurrence dataset has a unique identifier. If any missing or duplicate identifiers are found, publishing fails, and the problem records are logged in the publication report.
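As a rough illustration of what such a check involves, here is a minimal Python sketch. It is not the IPT's own code: the function name `find_identifier_problems`, the sample records, and the choices to treat blank values as missing and to compare identifiers case-sensitively are all assumptions made for the example.

```python
from collections import Counter


def find_identifier_problems(records, id_field="occurrenceID"):
    """Return (missing, duplicates) for a list of record dicts.

    missing:    1-based row numbers whose identifier is absent or blank.
    duplicates: sorted identifier values that occur more than once.
    """
    missing = []
    counts = Counter()
    for row_number, record in enumerate(records, start=1):
        # Assumption: a value that is None, empty, or only whitespace is missing.
        value = (record.get(id_field) or "").strip()
        if not value:
            missing.append(row_number)
        else:
            counts[value] += 1
    duplicates = sorted(value for value, n in counts.items() if n > 1)
    return missing, duplicates


# Hypothetical sample data: row 3 repeats "occ-1" and row 4 has no identifier.
records = [
    {"occurrenceID": "occ-1", "catalogNumber": "100"},
    {"occurrenceID": "occ-2", "catalogNumber": "101"},
    {"occurrenceID": "occ-1", "catalogNumber": "102"},
    {"occurrenceID": "", "catalogNumber": "103"},
]

missing, duplicates = find_identifier_problems(records)
# Publishing would only go ahead when both lists are empty.
publishable = not missing and not duplicates
```

Running a check like this over a source file before uploading it should surface the same kinds of records that would otherwise appear in the publication report.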

This new feature will support data publishers who use the Darwin Core term occurrenceID to uniquely identify their occurrence records. The change is intended to make it easier to link to records as they propagate throughout the network, which simplifies cross-referencing between databases and could help with tracking data use.

Previously, GBIF asked publishers to use three Darwin Core terms (institutionCode, collectionCode, and catalogNumber) to uniquely identify their occurrence records. This triplet-style identifier will continue to be accepted. However, it is notoriously unstable, since the codes are prone to change and in many cases are meaningless for datasets originating from outside the museum collections community. For this reason, GBIF is adopting the recommendations coming from the IPT user community and recommending the use of occurrenceID instead.

Best practice for occurrenceIDs is that they (a) must be unique within the dataset, (b) should remain stable over time, and (c) should be globally unique wherever possible. By taking advantage of the IPT’s built-in identifier validation, publishers will automatically satisfy the first condition.

Ultimately, GBIF hopes that by transitioning to more widespread use of stable occurrenceIDs, the following goals can be realized:

- GBIF can begin to resolve occurrence records using an occurrenceID. This resolution service could also help check whether identifiers are globally unique or not.

- GBIF’s own occurrence identifiers will become inherently more stable as well.

- GBIF can sustain more reliable cross-linkages to its records from other databases (e.g. GenBank).

- Record-level citation can be made possible, enhancing attribution and the ability to track data usage.

- It will be possible to consider tracking annotations and changes to a record over time.

The IPT 2.1 also includes support for uploading Excel files as data sources.

Another enhancement is that the interface has been translated into Japanese. GBIF offer their sincere thanks to Dr. Yukiko Yamazaki from the National Institute of Genetics (NIG) in Japan for this extraordinary effort.

In the 11 months since version 2.0.5 was released, a total of 11 enhancements have been added, and 38 bugs have been squashed. So what else has been fixed?

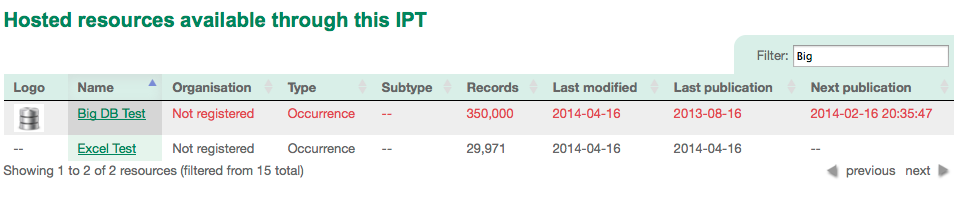

If you like the IPT’s auto publishing feature, you will be happy to know the bug causing the temporary directory to grow until disk space was exhausted has now been fixed. Resources that are configured to auto publish, but fail to be published for whatever reason, are now easily identifiable within the resource tables as shown:

If you have ever created a data source by connecting directly to a database like MySQL, you may have noticed an error that caused datasets to truncate unexpectedly upon encountering a row with bad data. Thanks to a patch from Paul Morris (Harvard University Herbaria), bad rows now get skipped and reported to the user without skipping subsequent rows of data.

As always we’d like to give special thanks to the other volunteers who contributed to making this version a reality:

- Marie-Elise Lecoq, and Gallien Labeyrie (GBIF France) - Updating French translation

- Yu-Huang Wang (TaiBIF, Taiwan) - Updating Traditional Chinese translation

- Nestor Beltran (Colombian Biodiversity Information System (SiB)) - Updating Spanish translation

- Etienne Cartolano, Allan Koch Veiga, and Antonio Mauro Saraiva (Universidade de São Paulo, Research Center on Biodiversity and Computing) - Updating Portuguese translation

- Carlos Cubillos (Colombian Biodiversity Information System (SiB)) - Contributing style improvements

Great move on enforcing unique identifiers within the dataset. Dataset-level unique IDs are both common and stable. For example, I can look up collectors' specimen identifiers from over 100 years ago and still link them to field notebooks as long as I know what dataset or collection they come from. Something GBIF should seriously consider is coupling the locally unique identifier with a dataset-level identifier, which would allow providers to focus on just the IDs within their dataset (something most field biologists do very well already) while making the IDs globally unique through the publishing mechanism. One implementation that supports just this is available through California Digital Library (see https://wiki.ucop.edu/display/Curation/ARK+Suffix+Passthrough).

Thanks JD. I just want to mention that we are doing exactly that ("considering") and using a service such as the EzID approach is one option (they support ARKs and DOIs). It is the intention to make single records traceable and resolvable. We do seek to have DOIs for datasets for citation reasons, so we need to consider whether the suffix passthrough approach would work with DOIs consistently (e.g. all resolvers) or not, or whether we need to consider DOI as well as ARK or similar. Some discussion on DOIs exists on http://www.canadensys.net/2012/link-love-dois-for-darwin-core-archives.

Just for info: our current target is a next major IPT release in Q4 2014, which would address this topic among others.

There are several places where I could ask this question, but here it might be discovered by the most people.

Assuming a dataset populates institutionCode, collectionCode, catalogNumber, datasetID, datasetName and occurrenceID, what parts is the GBIF harvesting mechanism using to create an identifier for a record, and in what order? I'm asking because we added collectionCode as a hack to allow indexing. I think that field is no longer required (?) and I'd like to know what parts I can change/remove without affecting the IDs.

Good question Peter.

GBIF have used the registered dataset key (the UUID assigned by the registry) in combination with institutionCode, collectionCode and catalogNumber in the past (datasetID and datasetName never came into play).

Imagine yesterday GBIF indexed a record with the triplet of institutionCode (A), collectionCode (B), and catalogNumber (C) and created a record in the index (record 1). GBIF now know that A:B:C -> record 1 and subsequent indexing will update that record.

Now consider today you add an explicit occurrenceID (X). When GBIF harvest the record, they will notice:

occurrenceID: X

catalogNumber: C

collectionCode: B

institutionCode: A

GBIF will start by looking for any "occurrenceID:X" and find none.

GBIF will then look up "A:B:C" and find the record to update. Additionally, GBIF will store "occurrenceID:X" -> record 1.

Future harvests of this record will always find it under the occurrenceID first, and at that point one could consider removing those "hacks". If one were to remove them immediately, the records would not be found and new ones would be created in the index, which is not desirable.
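The lookup order described above can be sketched in Python as follows. This is only an illustration, not GBIF's indexing code: the `OccurrenceIndex` class, its `upsert` method, the in-memory dictionary, and the example dataset key are all hypothetical, and scoping every lookup key by the dataset key is an assumption based on the description at the start of this reply.

```python
class OccurrenceIndex:
    """Toy in-memory index mapping lookup keys to record numbers."""

    def __init__(self):
        self._keys = {}        # lookup key -> record number
        self._next_record = 1  # next record number to hand out

    def upsert(self, dataset_key, record):
        """Return the record number for this record, creating one if needed."""
        occurrence_id = record.get("occurrenceID")
        triplet = (
            record.get("institutionCode"),
            record.get("collectionCode"),
            record.get("catalogNumber"),
        )
        # Build the candidate keys; either may be absent from a given record.
        id_key = ("occurrenceID", dataset_key, occurrence_id) if occurrence_id else None
        triplet_key = ("triplet", dataset_key) + triplet if all(triplet) else None

        # Look for the occurrenceID first, then fall back to the triplet.
        record_number = None
        for key in (id_key, triplet_key):
            if key is not None and key in self._keys:
                record_number = self._keys[key]
                break

        # Nothing matched, so a new record is created in the index.
        if record_number is None:
            record_number = self._next_record
            self._next_record += 1

        # Remember every key seen, so later harvests can match on either one.
        for key in (id_key, triplet_key):
            if key is not None:
                self._keys[key] = record_number
        return record_number


index = OccurrenceIndex()
ds = "example-dataset-key"  # hypothetical stand-in for the registry UUID

# Yesterday: only the triplet is present.
first = index.upsert(ds, {"institutionCode": "A", "collectionCode": "B", "catalogNumber": "C"})
# Today: occurrenceID X is added alongside the triplet.
second = index.upsert(ds, {"occurrenceID": "X", "institutionCode": "A",
                           "collectionCode": "B", "catalogNumber": "C"})
# Later: the triplet is dropped, but the record is still found via X.
third = index.upsert(ds, {"occurrenceID": "X"})
# Dropping the triplet before X was ever indexed would create a new record.
orphan = index.upsert(ds, {"occurrenceID": "Y"})
```

Here `first`, `second` and `third` all refer to the same record, while `orphan` gets a new one, which is the undesirable case mentioned above.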

Thanks Tim, I understand. Two additional questions:

1) You mentioned GBIF used the registered datasetKey + A:B:C in the past. I assume the registered datasetKey is still used, now in combination with occurrenceID (and if not there, with A:B:C)?

2) How would we know when it's safe to remove the "hacks"? Any index log we can consult?

1) Yes, since occurrenceID can be locally unique in the dataset, so datasetKey + occurrenceID = globally unique. Of course a stable globally unique occurrenceID (e.g. UUID) is a better option for reasons I am sure you already know (linkability, traceability etc).

2) Afraid other than inspecting the portal or using the GBIF helpdesk mail, it is not easily possible. An open ticket for crawling alerts exists, but has not bubbled up the priority list yet and is probably still some weeks down.

I actually think we should for the future restrict catalogNumber and collectionCode to museum collections and not abuse them for observational datasets. This is under discussion right now with the new MaterialSample term that is suggested to be used for specimen data.

To me that makes sense. There are probably other fields that should be removed too (e.g. typeStatus). In addition, there are plenty of terms which seem specific to the taxon core that are in the occurrence core (which currently has all core fields). I'm also in favour of removing those: it's confusing.
