This post continues the series of posts that highlight the latest updates on the GBIF Registry.

To recap, in April 2011 Jose Cuadra wrote The evolution of the GBIF Registry, a post that provided background on the GBIF Network, explained how Network entities are now stored in a database instead of a UDDI system, and described the Registry's new web application and API.

Then a month later, Jose wrote another post entitled 2011 GBIF Registry Refactoring that was more technical in nature and detailed a new set of technologies chosen to improve the underlying codebase.

Now even if you have been keeping an eye on the GBIF Registry, you probably missed the most important improvement that happened in September 2012: the Registry is now dataset-aware!

Being dataset-aware means that the Registry now knows about all the datasets that exist behind DiGIR and BioCASE endpoints. In case you are not familiar with them, DiGIR and BioCASE are wrapper tools used by organizations in the GBIF Network to publish their datasets. The datasets are exposed via an endpoint URL, and there can potentially be thousands of datasets behind a single endpoint.

Traditionally, the GBIF Registry knew about the endpoint but not about its datasets. It was then the job of GBIF's Harvesting and Indexing Toolkit (HIT) to discover what datasets existed behind the endpoint, harvest all their records, and index those records into the GBIF Data Portal.

Therefore, if you visited the GBIF Data Portal and viewed the Portal page for the Academy of Natural Sciences, you would find that it has 3 datasets.

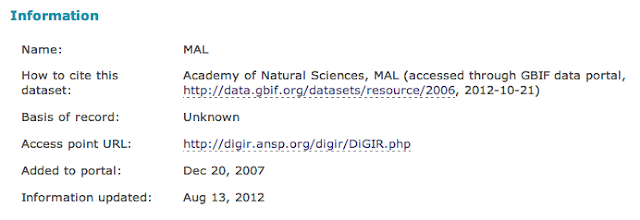

Clicking on each one reveals that they are all exposed via the same DiGIR endpoint (see "Access point URL").

But if you had visited the GBIF Registry and done the same search for the Academy of Natural Sciences before the Registry became dataset-aware, you would have seen that it has a DiGIR endpoint, but you would not have found any datasets!

Now that the GBIF Registry is dataset-aware, however, the Registry page for the Academy of Natural Sciences shows that the organization owns 3 datasets, and has a (DiGIR) Technical Installation.
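The relationship described above can be pictured as a small data model. The following is only an illustrative sketch: the class names, field names, endpoint URL and dataset titles are placeholders invented for this example and are not taken from the Registry's actual schema or API.

```python
from dataclasses import dataclass, field
from typing import List
from uuid import UUID, uuid4


@dataclass
class Dataset:
    title: str
    # Hypothetical field: stands in for the UUID the Registry allocates.
    key: UUID = field(default_factory=uuid4)


@dataclass
class Installation:
    kind: str          # e.g. "DiGIR" or "BioCASE"
    endpoint_url: str  # one URL can expose many datasets
    datasets: List[Dataset] = field(default_factory=list)


@dataclass
class Organization:
    name: str
    installations: List[Installation] = field(default_factory=list)

    def datasets(self) -> List[Dataset]:
        # Collect the datasets behind every installation the organization runs.
        return [d for inst in self.installations for d in inst.datasets]


# Placeholder endpoint URL and dataset titles, for illustration only.
installation = Installation("DiGIR", "http://example.org/digir/DiGIR.php")
installation.datasets = [Dataset(f"Placeholder dataset {i}") for i in (1, 2, 3)]
org = Organization("Academy of Natural Sciences", [installation])

print(len(org.datasets()))
```

Before the change, the Registry's view stopped at the installation level; in this sketch that would correspond to an `Installation` whose `datasets` list is always empty.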

So that's fantastic: the GBIF Registry now knows about thousands of datasets that previously only the GBIF Data Portal knew about. But how was dataset-awareness achieved?

First, the Registry now does the job of dataset discovery that the HIT used to do. A project called the registry-metadata-sync was created to do this.
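To give a feel for what dataset discovery involves, here is a minimal sketch of reconciling the datasets an endpoint advertises with those already registered. It is not the registry-metadata-sync code: the function name, the use of dataset names as the matching key, and the dataset names themselves are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple
from uuid import UUID, uuid4


def sync_installation(
    registered: Dict[str, UUID], discovered: List[str]
) -> Tuple[Dict[str, UUID], List[str], List[str]]:
    """Reconcile registered datasets with those found behind an endpoint.

    `registered` maps a dataset's name at the endpoint to its Registry UUID.
    Returns the updated mapping, the newly added names, and the names that
    are registered but were no longer advertised by the endpoint.
    """
    updated = dict(registered)  # existing UUIDs are never reassigned
    added = []
    for name in discovered:
        if name not in updated:
            updated[name] = uuid4()
            added.append(name)
    seen = set(discovered)
    missing = [name for name in registered if name not in seen]
    return updated, added, missing


# Hypothetical dataset names, for illustration only.
registered = {"birds": uuid4(), "fish": uuid4()}
updated, added, missing = sync_installation(registered, ["birds", "herps"])
print(added, missing)
```

In this sketch a dataset that disappears from the endpoint is only reported, not deleted, so its UUID stays reserved if the dataset comes back later.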

Second, a special set of scripts was written to migrate all the datasets from the GBIF Data Portal index database into the Registry database. For the first time, all datasets that existed in the GBIF Data Portal now exist in the GBIF Registry and can be uniquely identified by their GBIF Registry UUID!
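The core idea of such a migration can be sketched as follows: each dataset row from the Portal index gets a UUID, and the mapping from the old Portal identifier to the new UUID is kept so that re-running the migration does not mint new identifiers. The actual migration scripts are not shown in this post, so the row layout, field names and function below are hypothetical.

```python
from typing import Dict, List, Optional, Tuple
from uuid import UUID, uuid4


def migrate(
    portal_rows: List[dict], id_map: Optional[Dict[int, UUID]] = None
) -> Tuple[List[dict], Dict[int, UUID]]:
    """Turn Portal dataset rows into Registry records keyed by UUID."""
    id_map = {} if id_map is None else dict(id_map)
    records = []
    for row in portal_rows:
        portal_id = row["portal_id"]
        if portal_id not in id_map:
            id_map[portal_id] = uuid4()  # first time this dataset is seen
        records.append({"key": id_map[portal_id], "title": row["title"]})
    return records, id_map


# Hypothetical Portal rows, for illustration only.
rows = [
    {"portal_id": 101, "title": "Placeholder dataset A"},
    {"portal_id": 102, "title": "Placeholder dataset B"},
]
first_run, id_map = migrate(rows)
second_run, _ = migrate(rows, id_map)
print(first_run == second_run)
```

Keeping the identifier mapping is what makes the UUID usable as a stable key afterwards, for example by a harvester that needs to match indexed records to their dataset.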

Third, the HIT was branched, creating a revised version of the tool that was able to understand the new dataset-aware Registry. The HIT also had to be modified to allow its operators to still trigger dataset discovery by technical installation. Life just got easier for the HIT though, since it could use each dataset's GBIF Registry UUID to uniquely identify each dataset during indexation.

Indeed, the dataset-aware Registry allocates a UUID to each dataset. This is fundamentally the biggest advantage that the dataset-aware Registry brings. Having succeeded in uniquely identifying each dataset in its Registry, GBIF is now working to assign each dataset a Globally Unique Identifier (GUID) in the form of a Digital Object Identifier (DOI). The DOI for a dataset will be resolvable back to the GBIF Registry, and could be referenced when citing a dataset, thereby enabling better tracking of dataset usage in scientific publications.

GBIF is really excited about being able to provide publishers with a DOI for each of their datasets. Keep an eye on our Registry in the coming months for their grand appearance.