By targeting data locality, full table scans of HBase using MapReduce across 373 million records are reduced from 19 minutes to 2.5 minutes.

We've been posting some blogs about HBase performance, all based on the PerformanceEvaluation tools supplied with HBase. This has helped us understand many characteristics of our system, but in some ways it has sidetracked our tuning: we investigated channel bonding to increase inter-machine bandwidth, believing the network was our primary limitation. While that will help for many things (e.g. the copy between mappers and reducers), a key usage pattern involves full table scans of HBase (spawned by Hive), and in a well set up environment network traffic should be minimal for this. Here I describe how we approached this problem, and the results.

The environment

We run Ganglia for cluster monitoring (and ours is public) and Puppet to provision machines. As an aside, without these tools or an equivalent I don't think you can sanely hope to run HBase in production. Here we are using the 6 node cluster, where each of the 6 slaves runs a TaskTracker, DataNode and RegionServer, and each machine is a Dell R720xd with 64GB memory, 12 x 1TB SATA drives and dual 6 core hyper-threading CPUs. Quoting the user list: "HBase should be able to stretch its legs with this hardware".

Baseline

As a naive person getting access to the new cluster, I started by creating the HBase table, mounting a Hive table on it, and populating it with a select using data from a CSV file:

INSERT OVERWRITE TABLE occurrence_hbase SELECT * FROM occurrence_hdfs;

The first good news was that this ran in only 2.5hrs to load up 367 million records, with no pre-splitting of the table and no failures (normally we use bulk loading tools but here I was feeling lazy). I then crafted a super simple MR job based on TableMapReduceUtil that took an unfiltered Scan, and a Mapper that did nothing but increment a counter with the number of rows read. This is a decent replica of what Hive would do when doing a range query (at the moment Hive does not push predicates down to HBase as filters, except for equality filters). The first run took 19 minutes. This test was across 200GB of data (uncompressed) spread across 84 hard drives with 72 hyper-threading cores reading it, so I knew it was too slow. We started digging, and what follows are some insights into how we approached the task.
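To put "too slow" into numbers, here is a rough back-of-envelope sketch using only the figures quoted above (200GB, 19 minutes, 84 drives). It assumes 1 GB = 1000 MB for simplicity, and the class and method names are made up for illustration. For the first run it works out to roughly 175 MB/s across the whole cluster, or about 2 MB/s per drive.

```java
// Back-of-envelope scan throughput, using only figures quoted in the post.
// Assumption: 1 GB is treated as 1000 MB to keep the arithmetic simple.
class ScanThroughput {

    /** Aggregate read rate in MB/s for scanning dataGb gigabytes in the given seconds. */
    static double aggregateMbPerSec(double dataGb, double seconds) {
        return dataGb * 1000.0 / seconds;
    }

    /** Share of the aggregate rate that each drive would need to deliver. */
    static double perDriveMbPerSec(double dataGb, double seconds, int drives) {
        return aggregateMbPerSec(dataGb, seconds) / drives;
    }

    public static void main(String[] args) {
        double dataGb = 200.0;           // uncompressed table size quoted in the post
        int drives = 84;                 // drive count quoted in the post
        double baselineSecs = 19 * 60;   // first run: 19 minutes

        System.out.printf("aggregate: %.1f MB/s%n",
                aggregateMbPerSec(dataGb, baselineSecs));
        System.out.printf("per drive: %.2f MB/s%n",
                perDriveMbPerSec(dataGb, baselineSecs, drives));
    }
}
```

Calling the same methods with 150 seconds gives the equivalent rates for a 2.5 minute scan.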

Improvement #1: Host name versus IP address

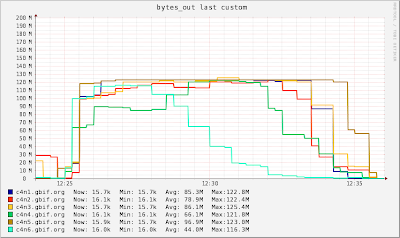

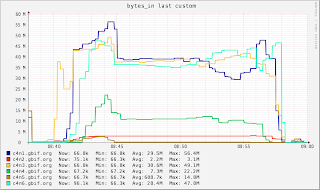

On an early run, we see the following on the Ganglia bytes_out:

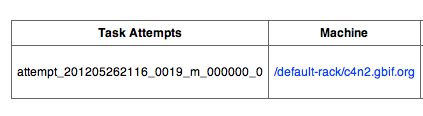

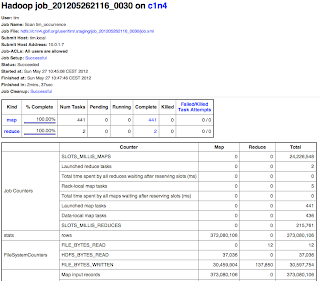

Here we see what we have seen many times: our network being saturated. But why? These are Mappers that should be hitting local RegionServers using local drives (12 of them). Looking at the number of data-local mappers spawned, we see that very few of the map tasks are data-local:

These actually refer to the same machine, but one is using the IP address, and the other the host name.

HBase is using the TableInputFormat which is returning the IP (thanks Stack for pointing this out). Now, it is important to note that this code is executed on the client calling machine, as it prepares a job for submission to the cluster. In my setup I was running over VPN from my laptop which was providing IP addresses for the region locations. The code in the TableInputFormatBase for our version of HBase was:

String regionLocation = table.getRegionLocation(keys.getFirst()[i]).getServerAddress().getHostname();

But on my laptop, a getHostname() on these machines always returned the IP address. Moving my code onto the cluster and launching from there solved this issue.
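To illustrate why this mismatch hurts, here is a deliberately simplified sketch. It is not Hadoop's scheduler code. It assumes that locality is decided by comparing the location string carried in each input split with the name the TaskTracker is known by, and all host names and addresses are made up. Under that assumption, splits that carry IP addresses are never counted as local (0 of 2 here), while splits that carry host names are (2 of 2).

```java
import java.util.Arrays;
import java.util.List;

// Simplified illustration only: this is NOT Hadoop's scheduler code.
// Assumption: locality is decided by comparing the split's location string
// with the name the TaskTracker is known by.
class LocalityCheck {

    /** Counts splits whose recorded location equals the name of some tracker. */
    static long countDataLocal(List<String> splitLocations, List<String> trackerNames) {
        return splitLocations.stream().filter(trackerNames::contains).count();
    }

    public static void main(String[] args) {
        // Hypothetical host names and addresses, made up for this example.
        List<String> trackers = Arrays.asList("node1.example.org", "node2.example.org");
        List<String> splitsByIp = Arrays.asList("10.0.0.1", "10.0.0.2");
        List<String> splitsByName = Arrays.asList("node1.example.org", "node2.example.org");

        System.out.println("local when splits carry IPs:   "
                + countDataLocal(splitsByIp, trackers));
        System.out.println("local when splits carry names: "
                + countDataLocal(splitsByName, trackers));
    }
}
```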

Improvement #2: Ensure HBase is balanced

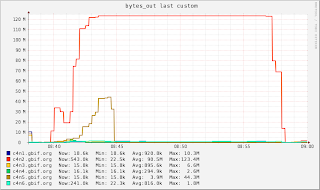

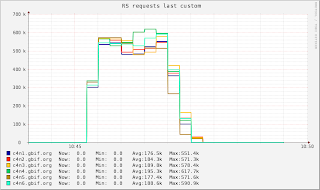

When starting these tests there were 2 tables on HBase. HBase reported it was nicely balanced, and major compactions had been run on the tables. On running, however, we still see a huge bottleneck, again shown clearly with bytes_out, bytes_in and region server requests:

Again the network saturation is clear, but 1 region server is getting hammered, and many machines are receiving data.

Why? Well, we run HBase 0.90 which does balancing across all tables and not on a per table basis. Thus, while HBase saw its regions evenly distributed across the servers, one machine was hot spotted for one table. This is fixed in HBase 0.94, but we don't run that yet and Ganglia clearly shows us this is a limitation. Again, MR is spawning tasks on other machines, all hitting one RS and saturating the network. Fortunately I could delete the unused table and rebalance the lot.
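A small sketch of how this can happen, using made-up numbers rather than our real region counts: two servers each hold 10 regions in total, so the cluster looks perfectly balanced, yet every region of one table sits on a single server. The class, table and server names are hypothetical, and this does not use any HBase API.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of why balancing across all tables can hide a
// per-table hot spot. Names and region counts are made up for this example.
class BalanceCheck {

    /** Sums region counts per server across all tables (table -> server -> regions). */
    static Map<String, Integer> totalPerServer(Map<String, Map<String, Integer>> regionsByTable) {
        Map<String, Integer> totals = new HashMap<>();
        for (Map<String, Integer> perServer : regionsByTable.values()) {
            for (Map.Entry<String, Integer> e : perServer.entrySet()) {
                totals.merge(e.getKey(), e.getValue(), Integer::sum);
            }
        }
        return totals;
    }

    /** Largest fraction (0..1) of one table's regions held by a single server. */
    static double maxShare(Map<String, Integer> perServer) {
        int total = 0;
        int max = 0;
        for (int n : perServer.values()) {
            total += n;
            max = Math.max(max, n);
        }
        return total == 0 ? 0.0 : (double) max / total;
    }

    public static void main(String[] args) {
        Map<String, Integer> scanned = new HashMap<>();
        scanned.put("rs1", 10);
        scanned.put("rs2", 0);
        Map<String, Integer> unused = new HashMap<>();
        unused.put("rs1", 0);
        unused.put("rs2", 10);

        Map<String, Map<String, Integer>> all = new HashMap<>();
        all.put("scanned_table", scanned);
        all.put("unused_table", unused);

        Map<String, Integer> totals = totalPerServer(all);
        System.out.println("rs1 total regions: " + totals.get("rs1"));
        System.out.println("rs2 total regions: " + totals.get("rs2"));
        System.out.println("max share of scanned_table on one server: " + maxShare(scanned));
    }
}
```

A check like maxShare per table is the kind of view a per-table balancer effectively works from, whereas the totals alone cannot reveal the hot spot.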

Miscellaneous improvements

Somewhere along the line I saw exceptions reporting timeouts and things like this:

48 Lease exceptions

org.apache.hadoop.hbase.regionserver.LeaseException: org.apache.hadoop.hbase.regionserver.LeaseException: lease '7961909311940960915' does not exist
    at org.apache.hadoop.hbase.regionserver.Leases.removeLease(Leases.java:230)
    at org.apache.hadoop.hbase.regionserver.HRegionServer.next(HRegionServer.java:1879)
    at sun.reflect.GeneratedMethodAccessor15.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:25)
    at java.lang.reflect.Method.invoke(Method.java:597)
    at org.apache.hadoop.hbase.ipc.HBaseRPC$Server.call(HBaseRPC.java:570)
    at org.apache.hadoop.hbase.ipc.HBaseServer$Handler.run(HBaseServer.java:1039)

Basically the HBase scan client is not calling back to the region server quickly enough, so the region server expires the scanner lease, and thus the task attempt fails. The result is that the JobTracker goes and spawns another task attempt, and here we often observed it was not a data-local attempt, which is everything we know we need to avoid for performance. The following were done to address this:

- Set the hbase.regionserver.lease.period to 600000, up from 60000. This was then the same as the TaskTracker timeout, so HBase would time out the scan client in the same duration that the JobTracker would time out the task attempt anyway.

- Reduce the number of mappers from 44 to 36 on each machine. Here we just ran a few runs, observed Ganglia load averages etc, repeated with different configuration and ultimately tuned the Mapper count to the point where no exceptions were thrown. There was no magic recipe to this, other than "rinse and repeat". This is where Puppet is gold.

The final run

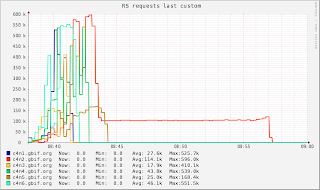

With a balanced HBase environment and the IP / host name issue resolved, the MR result and the Ganglia bytes_in, bytes_out and region server requests are shown:

We still see 5 mappers running across the network, which are due to the FairScheduler deciding it has waited long enough for a data local mapper and spawning another - we might investigate if we want to increase this wait time.

A final thought

All these tests used no Filter in the Scan, thus the entire data set was passed from the region server to the mapper. Adding a filter such as the following reduces the time to around 90 secs.

scan.setFilter(new SingleColumnValueFilter(
    Bytes.toBytes("v"),                // column family
    Bytes.toBytes("scientific_name"),  // column qualifier
    CompareOp.EQUAL,
    Bytes.toBytes("Abies alba")));     // value to match

We are using Hive 0.9 on HBase 0.90 and I believe Hive will push down the "equals" predicates to HBase, which will benefit from these kinds of filters. However, I wanted to run the tests without them, as we will often do range scans, and use custom UDFs to do things like point in polygon checking.

Thanks to everyone on the mailing lists for all their support through this. You all know who you are, but in particular thanks to Lars George and Stack. All GBIF work is open source, and we are committed to open data - we always welcome collaborations.